Augmented reality: the smart way to guide robotic urologic surgery

Abstract

The development of a tailored, patient-specific medical and surgical approach is becoming the object of intense research. In robotic urologic surgery, where a clear understanding of case-specific surgical anatomy is considered key to optimizing perioperative outcomes, this philosophy has gained increasing importance. Recently, significant advances in three-dimensional (3D) virtual modeling technologies have fueled interest in their application to robotic minimally invasive surgery for kidney and prostate tumors. The aim of this review is to provide a synthesis of current applications of 3D virtual models for robot-assisted radical prostatectomy and partial nephrectomy. Medline, PubMed, the Cochrane Database, and Embase were screened for literature on the use of 3D augmented reality (AR) during robot-assisted radical prostatectomy and partial nephrectomy. The use of 3D AR models for intraoperative surgical navigation has been tested in both prostate and kidney surgery. During robot-assisted radical prostatectomy, different groups have reported that AR guidance influences the positive surgical margin rate and guides selective biopsy of the neurovascular bundle. In robot-assisted partial nephrectomy, AR guidance improves surgical strategy, leading to higher rates of selective clamping, less loss of healthy parenchyma, and better postoperative kidney function. In conclusion, the available literature suggests a potentially crucial role for 3D AR technology in improving the perioperative results of robot-assisted urological procedures. In the future, artificial intelligence may be key to further improving this promising technology.


INTRODUCTION

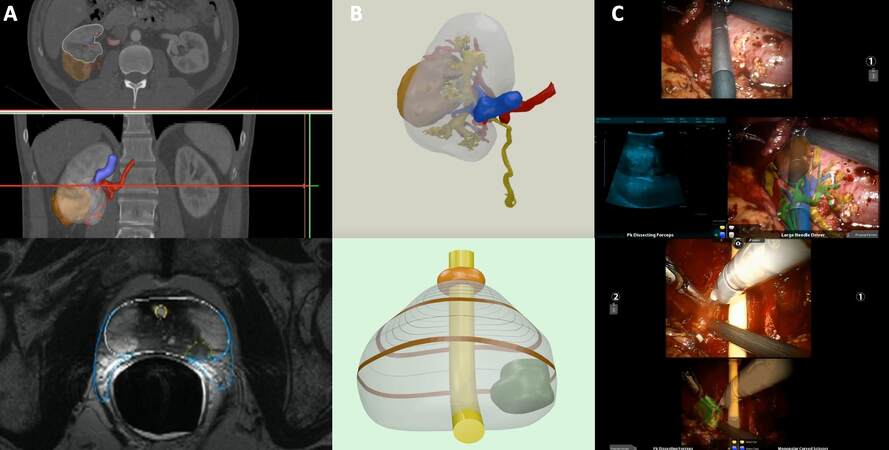

In recent decades, urologic surgery has been dramatically changed by the advent of robotic surgery, which represents the biggest innovation of the modern surgical era. Better visualization of anatomical structures and details[1] has given rise to the concept of “precision surgery”[2]. Despite this technological improvement, preoperative planning still relies on conventional bidimensional imaging [e.g., computed tomography (CT) or magnetic resonance imaging (MRI)], which cannot provide depth perception or an accurate representation of anatomical details[3]. Three-dimensional (3D) model reconstruction, which has gained growing popularity among urologists, has become a valid tool to overcome these limitations and improve anatomical comprehension of the surgical field. Hyper-accurate 3D virtual reconstructions evolved from the automatic reconstructions provided by common radiological software, driven by technological advances and close collaboration between bioengineers and clinicians[4]. Although many software packages and companies offer such models, the main steps to build them are very similar. First, high-quality bidimensional Digital Imaging and Communications in Medicine (DICOM) images must be acquired (e.g., CT with < 3 mm slices). Subsequently, the images are segmented manually to obtain the different anatomical structures of the target organ. After this crucial step, the virtual model can be exported in a format compatible with the intended electronic device, or 3D printed using several materials[5]. Beyond these applications, several new technologies have been developed in recent years to overlay 3D models onto the real anatomy, increasing the surgeon’s awareness of the surrounding operative field and opening up the augmented reality (AR) setting [Figure 1].
In urology, this new technology has gained popularity, especially during robotic procedures, with promising results in terms of quality of care, perioperative surgical outcomes, patient selection, and counseling[5-7]. The present narrative review aims to offer a comprehensive overview of the current and future applications of AR during robot-assisted urological surgery.

Figure 1. Building process of a 3D virtual reconstruction from standard bidimensional images and its application during an augmented reality procedure for both kidney and prostate tumors. (A) High-quality images (e.g., CT with < 3 mm slices) are processed manually using dedicated software, outlining the different portions of the organ and tumor according to their radiological characteristics. (B) At the end of the process, the virtual model can be rendered and exported in PDF format. (C) Integration of the 3D virtual reconstruction into the da Vinci surgical console via TilePro enables the augmented reality procedure. During robot-assisted partial nephrectomy, intraoperative US confirms the tumor location indicated by the 3D virtual model superimposed onto the real anatomy. During robot-assisted radical prostatectomy, the tumor is overlaid onto the neurovascular bundle to perform selective biopsy. 3D: Three-dimensional; US: ultrasound.
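The acquisition and segmentation steps described in the Introduction and in Figure 1 can be illustrated with a minimal Python/NumPy sketch. It assumes the CT series has already been loaded into an array of Hounsfield units with known (z, y, x) voxel spacing in millimetres. It checks the < 3 mm slice requirement and then, purely for illustration, replaces the manual segmentation described in the text with simple intensity thresholding, which is a deliberately crude stand-in. The function names and threshold values are hypothetical.

```python
import numpy as np

MAX_SLICE_THICKNESS_MM = 3.0  # the "< 3 mm slices" requirement from the text


def slices_thin_enough(spacing_mm):
    """spacing_mm = (z, y, x) voxel spacing in mm; z is the slice thickness."""
    return spacing_mm[0] < MAX_SLICE_THICKNESS_MM


def threshold_segment(volume_hu, lower_hu, upper_hu):
    """Boolean mask of voxels whose value lies in [lower_hu, upper_hu].

    Hypothetical, simplified stand-in for the manual segmentation step.
    """
    return (volume_hu >= lower_hu) & (volume_hu <= upper_hu)


def mask_volume_ml(mask, spacing_mm):
    """Volume of a binary mask in millilitres (1 mL = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return float(mask.sum()) * voxel_mm3 / 1000.0


if __name__ == "__main__":
    # Synthetic 20x20x20 "scan": background -100 HU, a 4x4x4 block at 150 HU.
    vol = np.full((20, 20, 20), -100.0)
    vol[8:12, 8:12, 8:12] = 150.0
    spacing = (2.0, 1.0, 1.0)  # 2 mm slices, 1 mm in-plane resolution
    assert slices_thin_enough(spacing)
    mask = threshold_segment(vol, 100.0, 300.0)
    print(mask.sum(), mask_volume_ml(mask, spacing))  # prints: 64 0.128
```

In practice each structure (parenchyma, tumor, vessels, collecting system) would be outlined separately and converted to a surface mesh before export; the sketch stops at the voxel mask.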

EVIDENCE ACQUISITION

A non-systematic review of the literature was conducted. The Medline, PubMed, Cochrane Database, and Embase databases were searched for clinical trials, randomized controlled trials, review articles, and prospective and retrospective studies assessing the role of 3D AR during robotic urologic procedures.

EVIDENCE SYNTHESIS

Since its introduction, 3D AR technology has been used in urology primarily to enhance preoperative planning and, even more, intraoperative navigation. As mentioned above, AR refers to the real-time superimposition of virtual objects (such as 3D reconstructions) onto the real environment (e.g., the operative field)[8]. Once a 3D model reconstruction is obtained, further pivotal steps are required to perform AR. First, images of the real operative field must be recorded (e.g., as shown by the endoscopic camera). The next step consists of simultaneously tracking any movement of the target organ and surgical tools. Finally, the images are merged, allowing the user to visualize an enhanced operative field[9]. In the robotic setting, AR has been tested especially during radical prostatectomy and partial nephrectomy. The main findings are reported in Table 1, and the available experiences are discussed in detail in the following paragraphs.
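The final merging step can be sketched as simple alpha compositing. The minimal Python/NumPy example below assumes that the recording and tracking steps have already produced a rendered view of the 3D model aligned with the current endoscopic frame, together with a mask of the pixels the model covers. The function name `overlay_model` and its `alpha` (model opacity) parameter are hypothetical, and the sketch is not the method of any specific platform cited in this review. Lowering `alpha` makes the model more transparent, which is one way to reveal structures hidden beneath it.

```python
import numpy as np


def overlay_model(frame, rendered, mask, alpha=0.5):
    """Alpha-blend a rendered 3D-model view onto an endoscopic frame.

    frame, rendered: (H, W, 3) uint8 images of identical size; `rendered` is
        assumed to be already registered (aligned) to the camera view.
    mask: (H, W) bool array, True where the model projects onto the image.
    alpha: model opacity in [0, 1]; lower values give a more transparent model.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    base = frame.astype(np.float32)
    blended = (1.0 - alpha) * base + alpha * rendered.astype(np.float32)
    out = base.copy()
    out[mask] = blended[mask]  # only pixels covered by the model change
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

In a live system this function would run once per video frame, after the tracking step has updated the model pose used to produce `rendered` and `mask`.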

Table 1. Main experiences with 3D augmented reality navigation for robotic urologic surgery

| Author, journal, year; reference | Type of study | Setting (Surgical planning, indication, training, intraoperative guidance) | Number of cases | Outcomes evaluated | Main findings |
|---|---|---|---|---|---|
| Thompson et al., BJU Int, 2013; [10] | Prospective feasibility study | Intraoperative guidance | 13 patients | Improvement of clinical outcomes with 3D guidance | The surgeons found the system to be a useful reference tool during surgery, even though no measurable outcome was improved by its application |
| Porpiglia et al., Urology, 2018; [11] | Prospective study | AR intraoperative guidance | 30 patients | Effectiveness of the AR system during RARP | The AR system proved safe and effective; the accuracy of the 3D reconstruction seemed promising for intraoperative navigation |
| Porpiglia et al., BJU Int, 2019; [12] | Prospective study | Surgical planning and intraoperative guidance | 30 patients (11 without ECE, 19 with ECE) | Usefulness of HA3D models and AR to improve the effectiveness of RARP | HA3D models overlaid during AR-RARP can be useful for identifying tumors’ extracapsular extension |
| Porpiglia et al., Eur Urol, 2019; [13] | Prospective study | Intraoperative guidance | 40 patients (20 in the 3D group and 20 in the 2D control group) | Usefulness of 3D elastic AR to identify capsular involvement | The 3D elastic AR system may help reduce the positive surgical margin rate and improve functional outcomes |
| Schiavina et al., Eur Urol Focus, 2021; [14] | Prospective study | Surgical planning and intraoperative guidance | 26 patients | Usefulness of the 3D AR system to perform tailored NS | The 3D AR system can influence the nerve-sparing strategy during RARP |
| Wake et al., Urology, 2018; [15] | Video article | Surgical planning and intraoperative guidance | 16 patients | Usefulness of 3D models | 3D printed and AR models can influence surgical planning decisions and be useful intraoperatively |
| Porpiglia et al., Eur Urol, 2020; [16] | Retrospective study | Intraoperative guidance | 91 patients (48 in the 3D group and 43 in the US group) | Subjective accuracy of 3D static and elastic AR systems | HA3D models overlaid onto in vivo anatomy during AR-RAPN for complex tumors can be useful for identifying the lesion and intraparenchymal structures |
| Schiavina et al., Clin Genitourin Cancer, 2021; [17] | Prospective study | Surgical planning and intraoperative guidance | 15 patients | Usefulness of 3D models to perform selective clamping | AR may improve intraoperative knowledge of renal anatomy, with higher adoption of selective and super-selective clamping approaches |
| Kobayashi et al., Int J Comput Assist Radiol Surg, 2019; [18] | Prospective study | Surgical system testing | 53 patients (21 in the non-SN group and 32 in the SN group) | Surgical technique assessment | The SN system with a 3D model in VR is effective and may improve surgical skills by reducing the connected motions of robotic forceps |
| Michiels et al., World J Urol, 2021; [20] | Retrospective study | Surgical planning and intraoperative guidance | 645 patients (230 3D-IGRAPN and 415 controls) | Complication rate | 3D kidney reconstructions lower the risk of complications and improve perioperative clinical outcomes of RAPN |
| Kavoussi et al., J Endourol, 2021; [21] | Validation study | Intraoperative guidance | 2 phantom kidneys | Technology validity | The presented technology is easily integrated into the surgical workflow |

2D: Two-dimensional; 3D: three-dimensional; 3D-IGRAPN: 3D image-guided robot-assisted partial nephrectomy; AR: augmented reality; ECE: extracapsular extension; HA3D: hyper-accuracy three-dimensional; NS: nerve-sparing; RAPN: robot-assisted partial nephrectomy; RARP: robot-assisted radical prostatectomy; SN: surgical navigation; US: ultrasound; VR: virtual reality.

3D AR guidance during robot-assisted radical prostatectomy

One of the first AR experiences during robot-assisted radical prostatectomy (RARP) was reported in 2013 by Thompson et al.[10]. They built and evaluated an intraoperative navigation system that overlaid T2-weighted MRI images during surgery. After a preclinical assessment, the system was used during 13 standard RARPs, and the surgeons’ feedback was evaluated.

Some years later, Porpiglia et al. published their initial AR experience using a specifically developed software, named pViewer, with which the 3D models were loaded onto a dedicated AR platform[11]. In this way, it was possible to reproduce the displacements of the target organ during surgery. To further aid the surgeon, the transparency of the 3D model could be modified to show hidden anatomical details of the organ or the tumor’s margins. The AR platform then combined the incoming video stream from the robotic endoscopic camera with the 3D model images, and the resulting stream was sent back to the remote console using Tile-pro™. Finally, the original intraoperative images and the overlapped images were displayed on the console screen, with the overlay continuously moved and adjusted by a dedicated assistant. In this preliminary study, 16 patients undergoing full nerve-sparing RARP for stage cT2 prostate cancer were compared with 14 patients with cT3 tumors (assessed at MRI) who underwent standard RARP with selective biopsies of the neurovascular bundles at the level of suspected extraprostatic extension. Overall, the positive surgical margin (PSM) rate was 30%, but no PSM was found in pT2 tumors. Selective bundle biopsies performed with AR assistance confirmed extracapsular disease in 11/14 patients (78.6%). These preliminary data were confirmed in an update published in 2019[12], in which selective biopsies at the level of the neurovascular bundle correctly identified extraprostatic extension in 75% of cases.

To improve the realism of the 3D models and account for organ deformations occurring during the intervention [e.g., during the nerve-sparing (NS) phase], the same group developed a new 3D elastic model[13]. Using non-linear parametric deformation along the two main axes, these models were able to replicate the deformation of the prostate during the surgical procedure. The accuracy of this technology was tested by placing a metal clip on the prostate surface at the level of the target lesion, identified with the elastic 3D model in an AR setting. The model correctly identified the lesion in all patients (100% accuracy).
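The deformation model is described here only as “non-linear parametric deformation along the two main axes”. Purely to illustrate what such a deformation can look like, the hypothetical Python/NumPy sketch below displaces model vertices along each of two axes by an amount that grows quadratically with their offset along the other axis. The quadratic form, the coefficients `a` and `b`, and the function name are assumptions for illustration, not the authors’ model.

```python
import numpy as np


def bend_two_axes(vertices, a, b, center=None):
    """Apply a smooth quadratic displacement along the first two axes.

    Hypothetical illustration of a non-linear parametric deformation.
    vertices: (N, 3) array of model vertex coordinates (e.g. mm).
    a: displacement along axis 0 per unit of (axis-1 offset)^2.
    b: displacement along axis 1 per unit of (axis-0 offset)^2.
    center: reference point for the offsets (defaults to the vertex centroid).
    """
    v = np.asarray(vertices, dtype=float)
    c = v.mean(axis=0) if center is None else np.asarray(center, dtype=float)
    d = v - c  # offsets from the reference point, computed before any update
    out = v.copy()  # the input array is left untouched
    out[:, 0] += a * d[:, 1] ** 2
    out[:, 1] += b * d[:, 0] ** 2
    return out
```

Setting `a = b = 0` returns the undeformed model; in an elastic AR setting the parameters would be adjusted so that the rendered model follows the organ as it is mobilized.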

In 2021, Schiavina et al. reported their experience with 3D AR guidance during RARP to modulate the nerve-sparing phase of the procedure intraoperatively[14]. 3D AR technology was found to be useful for improving real-time identification of the index lesion and for the subsequent modulation of the nerve-sparing approach, which occurred in approximately 30% of cases. The positive surgical margin rate at the level of the index lesion was 11.5%, and erectile function recovery at six months was 65% among patients who underwent NS RARP. Moreover, the decision to adopt a more conservative nerve-sparing approach under 3D AR guidance proved safe (no PSM) in more than 83% of cases.

3D AR guidance during robot-assisted partial nephrectomy

The growing demand for nephron-sparing surgery has prompted several groups to progressively explore the role of 3D AR assistance, with the aim of assessing its contribution to intraoperative surgical navigation.

The impact on the decision-making process was recently evaluated by Wake et al., who reported their experience combining 3D printing and AR for image visualization in 15 robot-assisted partial nephrectomies (RAPNs)[15]. They showed that this tool provided appropriate and safe guidance for surgical planning. The major limitation, as described by the authors, was the need to divert the main surgeon’s attention to consult the model on an external device (e.g., Microsoft HoloLens).

In 2020, Porpiglia et al. published a comparative case-control study of 3D AR guidance vs. intraoperative ultrasound (US) guidance[16]. In this study, the virtual model was manually overlaid by a dedicated assistant onto the endoscopic view of the da Vinci robotic console and displayed using Tile-pro™, so that the surgeon remained focused on the surgery without distractions. In total, 91 patients with highly complex renal masses (PADUA score ≥ 10) were included (48 in the 3D AR group and 43 in the US group). 3D AR assistance was more effective than standard intraoperative US, with higher rates of selective clamping and pure enucleation techniques (62.5% vs. 37.5%, P = 0.02). Moreover, the 3D group showed a lower collecting system violation rate (10.4% vs. 45.5%, P < 0.001). On functional assessment by renal scan, the US group showed a greater reduction in renal function than the 3D group (P = 0.01).

In a 15-patient study, Schiavina et al. closely reproduced both the AR technology and the study design described above to perform 3D AR RAPN[17]. Their findings confirm the important role of 3D models in improving the comprehension of vascular anatomy, allowing the surgeon to perform selective or super-selective clamping in 80% of cases. These intraoperative choices were concordant with the preoperative plan based on 3D model consultation in 87% of cases.

The value and impact of 3D virtual reconstruction-guided RAPN were examined in two studies by Kobayashi et al. The first study[18] reported the authors’ initial experience with a surgical navigation (SN) system able to overlap and integrate endoscopic images with 3D virtual models during RAPN. The study focused on the skills of two expert surgeons in handling the renal artery with (SN group, 32 patients) and without (non-SN group, 21 patients) the system. The technology significantly shortened the dissection time (16 vs. 9 min in the non-SN and SN groups, respectively, P < 0.001) and reduced inefficient motions (“insert”, “pull”, and “rotate”, all P < 0.03). Furthermore, the improvement in connected motions was positively associated with SN for both surgeons.

The second study[19] was a retrospective analysis of RAPNs performed from 2013 to 2018 with (102 patients) and without (44 patients) the SN system. It aimed to evaluate the feasibility of image-guided surgery during RAPN and the association between SN and postoperative renal parenchymal volume. After propensity score matching, each arm included 42 patients. The use of SN was associated with higher parenchymal volume preservation rates (90.0% vs. 83.5%, P = 0.042), suggesting better preservation of renal function after surgery.
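One common way to express a parenchymal volume preservation rate is as the ratio of postoperative to preoperative parenchymal volume of the operated kidney. The exact definition and volumetry method used by Kobayashi et al. are not detailed in this review, so the expression below is an assumed, generic form rather than the study’s own formula:

```latex
\mathrm{PVPR}\ (\%) = \frac{V_{\mathrm{post}}}{V_{\mathrm{pre}}} \times 100
```

Under this reading, a rate of 90.0% corresponds to losing one tenth of the preoperative parenchymal volume, and a rate of 83.5% to losing 16.5% of it.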

Furthermore, Michiels et al. discussed the importance of 3D virtual reconstructions during nephron-sparing surgery[20]. Their large multicenter propensity score-matched analysis included 645 patients who underwent RAPN, 230 of whom were operated on under 3D guidance. Despite larger tumor size and higher surgical complexity in the 3D group, 3D model guidance resulted in a lower rate of major postoperative complications (3.8% vs. 9.5%), a smaller variation in estimated glomerular filtration rate (eGFR) (-5.6% vs. -10.5%), and a higher rate of trifecta achievement (55.7% vs. 45.1%), with trifecta defined as negative surgical margins, 90% eGFR preservation, and absence of perioperative issues. Although the large number of 3D-guided procedures and the inclusion of a control group are definite strengths of the study, the generalizability of its results may be limited, since the procedures were performed by three expert high-volume surgeons and perioperative management was not standardized among the centers.
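The eGFR variation and trifecta criteria summarized above can be written as a small Python sketch. It assumes that eGFR variation is the relative change from baseline in percent, that “90% eGFR preservation” means a postoperative eGFR of at least 90% of baseline, and that “absence of perioperative issues” can be reduced to a single yes/no flag. These are interpretive assumptions rather than the study’s published definitions, and the function names are hypothetical.

```python
def egfr_change_pct(pre_egfr, post_egfr):
    """Relative eGFR variation in percent (negative values = loss of function)."""
    if pre_egfr <= 0:
        raise ValueError("baseline eGFR must be positive")
    return (post_egfr - pre_egfr) / pre_egfr * 100.0


def trifecta_achieved(negative_margins, pre_egfr, post_egfr,
                      perioperative_issue, min_preserved=0.90):
    """True only if all three trifecta criteria hold.

    Assumptions: eGFR preservation means post_egfr >= min_preserved * pre_egfr,
    and any perioperative issue is encoded as a single boolean flag.
    """
    preserved = post_egfr >= min_preserved * pre_egfr
    return negative_margins and preserved and not perioperative_issue
```

For example, a patient whose eGFR falls from 100 to 94.4 mL/min/1.73 m² has a variation of about -5.6% and still meets the assumed preservation criterion.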

New perspectives: automatic guidance with 3D virtual reconstructions

As noted above, a primary limitation of 3D AR technology is the need for a dedicated assistant to control the precise overlap. Several solutions have been proposed to overcome this limitation. An interesting one was offered by Kavoussi et al.[21], who developed a touch-based registration that automatically anchors the 3D model. This setup tracked intraoperatively the tip of a robotic instrument touching the kidney surface, creating a 3D surface point set that could be matched to a previously prepared patient-specific model. The authors performed RAPN with their intraoperative navigation system on two phantom kidneys, one reproducing an exophytic tumor and the other an endophytic tumor. Landmarks were marked on the phantom organ with a surgical pen and then identified with the robotic manipulator after the initial registration. During the resection, the 3D model was shown to the surgeon on the console through a dedicated picture-in-picture visualization system, which made it possible to follow preplanned resection contours. After the resection, re-registration was performed by re-identifying the landmarks, and the registrations made before and after the resection were compared. The average root mean square (RMS) target registration error (TRE) was 2.53 mm in the endophytic phantom and 4.88 mm in the exophytic phantom, indicating good accuracy, and re-registration after the resection had only a small effect on TRE.
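The registration and error metric mentioned above can be illustrated with a minimal Python/NumPy sketch. Kavoussi et al. matched a touched surface point set to the model, which generally calls for an iterative surface-matching method; the sketch below instead shows only the simpler paired-point case (Kabsch/SVD least-squares rigid fit), which applies once point correspondences are known, for example with landmarks. It also shows how an RMS TRE is computed from target points that were not used for the fit. The function names are hypothetical and this is not the authors’ implementation.

```python
import numpy as np


def rigid_register(src, dst):
    """Least-squares rigid transform (Kabsch/SVD) with R @ src_i + t ~= dst_i.

    src, dst: (N, 3) arrays of corresponding points (N >= 3, not collinear),
    e.g. landmarks in model space and the same landmarks touched by the robot.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (det = -1) solution.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def rms_tre(R, t, targets_model, targets_measured):
    """Root mean square target registration error (same units as input, e.g. mm).

    Targets should be points NOT used to compute the registration.
    """
    mapped = np.asarray(targets_model, dtype=float) @ R.T + t
    errors = np.linalg.norm(mapped - np.asarray(targets_measured, dtype=float), axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))
```

Evaluating TRE on targets held out from the fit matters because the residual on the fitted points alone tends to underestimate the error at clinically relevant locations such as the tumor.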

Amparore et al. aimed to develop fully automated overlapping of 3D reconstructions in AR. As described in their preliminary experience[22], they used computer vision algorithms to identify fiducial points that could be matched to the virtual models. Because the colors of the operative field are highly homogeneous, the authors used fluorescence imaging technology [the near-infrared fluorescence (NIRF) Firefly system integrated into the da Vinci platform]. In this way, the whole kidney, appearing as a bright green shape surrounded by a dark field, became the marker for the software. Once the kidney was detected, the software automatically overlaid the 3D model onto the endoscopic view [Figure 2]. The kidney’s rotation was calculated from the visible capsular curve (or at least a portion of it), which was completed by the virtual borders overlaid on the detected curve. When necessary, an experienced assistant could refine the matching using a mouse.

Figure 2. Indocyanine green-guided automatic augmented reality RAPN. Once the kidney is fully freed from the perirenal fat, a solution of ICG is injected intravenously, allowing the Firefly camera to visualize the kidney, which appears as a bright green organ surrounded by the dark operative field. Subsequently, dedicated software based on computer vision technology automatically anchors the 3D virtual model to the real organ. RAPN: Robot-assisted partial nephrectomy; ICG: indocyanine green; 3D: three-dimensional.

Once registration was achieved, the surgeon could switch to normal vision while maintaining the superimposition of the 3D model over the real anatomy, and proceed with the intervention, i.e., the resection of the renal mass. Notably, all the overlaps performed were correct. Although preliminary, this experience showed promising results for the use of “automatic” AR guidance.
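The fluorescence-based detection step described above can be illustrated with a minimal Python/NumPy sketch. It assumes an RGB frame in which the ICG-enhanced kidney appears as a bright green region on a dark background; the thresholds and function name are hypothetical. Instead of the capsular-curve matching used by Amparore et al., it estimates the region’s centroid and in-plane orientation from image moments, a simpler, swapped-in technique that only illustrates how a high-contrast fluorescent region can act as a marker.

```python
import numpy as np


def detect_fluorescent_organ(frame, green_min=150, margin=50):
    """Segment a bright-green (fluorescent) region and estimate its 2D pose.

    frame: (H, W, 3) uint8 RGB image.
    green_min, margin: hypothetical thresholds; a pixel is kept when its green
    value is >= green_min and exceeds both red and blue by >= margin.
    Returns (mask, centroid, angle): centroid is (row, col); angle is the
    major-axis orientation in radians from the image column axis (x right,
    y down). centroid and angle are None if no pixel passes the thresholds.
    """
    f = frame.astype(np.int32)  # avoid uint8 wrap-around in the differences
    r, g, b = f[..., 0], f[..., 1], f[..., 2]
    mask = (g >= green_min) & (g - r >= margin) & (g - b >= margin)
    if not mask.any():
        return mask, None, None
    rows, cols = np.nonzero(mask)
    cy, cx = rows.mean(), cols.mean()
    # Second-order central moments of the binary region.
    mu20 = np.mean((cols - cx) ** 2)
    mu02 = np.mean((rows - cy) ** 2)
    mu11 = np.mean((cols - cx) * (rows - cy))
    angle = 0.5 * np.arctan2(2.0 * mu11, mu20 - mu02)
    return mask, (float(cy), float(cx)), float(angle)
```

The centroid and angle could seed an initial model pose, which an assistant or a finer contour-matching step would then refine, mirroring the manual refinement option described in the text.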

In the near future, deep learning algorithms and artificial intelligence could represent a further step in enhancing AR guidance[23,24]. Thanks to their ability to handle large amounts of data, the quality assessment of geometrical features for 3D recognition may improve considerably, as already reported in other settings[25].

These new fields of research could therefore represent a turning point in intraoperative surgical navigation by improving the accuracy and precision of 3D AR guidance.

Previous 3D AR guidance reviews

Some authors have already performed systematic and non-systematic reviews in this field. In 2018, Bertolo et al. reported the first available experiences with AR technology in different urological settings[26]. Similarly, Roberts et al. recently reviewed the different applications of AR according to the type of surgical procedure, reporting increased registration precision, improved detection of organ boundaries, and better surgical outcomes in selected scenarios[27]. The novelty of our review lies in its focus on robotic surgery: our aim is to provide a comprehensive review of the current applications of AR in this most innovative surgical approach, reporting the available strategies to increase the accuracy of robotic procedures and improve perioperative outcomes.

CURRENT LIMITS AND FUTURE DIRECTIONS

Notwithstanding the promising results of 3D AR guidance in robotic urologic surgery, this technology is still limited in several respects. The first limitation is the small number of patients enrolled in published studies, which often lack a control group. Second, comparisons among different experiences are difficult because of the different approaches and methods used to build the 3D models and to apply them as intraoperative guidance. The result is a lack of methodological uniformity and a wide variety of software and technologies, leading to a broad range of accuracies and costs. Standardization is made even harder by the continuous evolution of this technology. In the future, international guidelines shared among surgeons and medical engineers could be key to overcoming these issues by setting quality standards for 3D reconstructions, so that their use in clinical practice can be fully assessed. The outcomes investigated are usually perioperative (blood loss, ischemia time, perioperative complications, etc.), with few reports on patients’ quality of life after the intervention. Since this assessment plays a pivotal role in fully evaluating patient outcomes, it should be a mandatory part of future investigations.

Another critical point, as always with emerging technologies, is cost, and this case is no exception. Because of the lack of standardization mentioned above, reliable per-model cost estimates are not available. Moreover, the ongoing evolution and introduction of new tools make an accurate assessment of expected costs even harder.

CONCLUSIONS

The available experience with 3D AR platforms to enhance robot-assisted urologic procedures has shown interesting and promising outcomes. Despite some indisputable limitations, AR guidance has improved the surgeon’s awareness of the operative field and the perioperative results in both kidney and prostate surgery without compromising patient safety. In the near future, progressive technological development, standardized protocols for creating 3D models and performing AR, and more prospective controlled research could further improve and clarify the role of 3D AR guidance during robotic procedures.

DECLARATIONS

Authors’ contributions

Protocol/project development: Manfredi M, Piramide F

Data collection or management: Amparore D, De Cillis S, Piana A, Busacca G, Colombo M, Meziere J, Granato S

Data analysis: De Cillis S, Piramide F, Piana A, Checcucci E

Manuscript writing/editing: Piramide F, Burgio M, Manfredi M

Supervision: Fiori C, Manfredi M, Porpiglia F

Availability of data and materials

Not applicable.

Financial support and sponsorship

None.

Conflicts of interest

All authors declared that there are no conflicts of interest.

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Copyright

© The Author(s) 2022.

REFERENCES

1. Zargar H, Zargar-Shoshtari K, Laydner H, Autorino R. Anatomy of contemporary partial nephrectomy: a dissection of the available evidence. Eur Urol 2015;68:993-5.

2. Autorino R, Porpiglia F, Dasgupta P, et al. Precision surgery and genitourinary cancers. Eur J Surg Oncol 2017;43:893-908.

3. Ficarra V, Caloggero S, Rossanese M, et al. Computed tomography features predicting aggressiveness of malignant parenchymal renal tumors suitable for partial nephrectomy. Minerva Urol Nephrol 2021;73:17-31.

4. Porpiglia F, Bertolo R, Checcucci E, Amparore D, Autorino R, Dasgupta P, et al. Development and validation of 3D printed virtual models for robot-assisted radical prostatectomy and partial nephrectomy: urologists’ and patients’ perception. World J Urol 2018;36:201-7.

5. Veneziano D, Amparore D, Cacciamani G, Porpiglia F; Uro-technology and SoMe Working Group of the Young Academic Urologists Working Party of the European Association of Urology; European Section of Uro-technology. Climbing over the barriers of current imaging technology in urology. Eur Urol 2020;77:142-3.

6. Ukimura O, Aron M, Nakamoto M, et al. Three-dimensional surgical navigation model with TilePro display during robot-assisted radical prostatectomy. J Endourol 2014;28:625-30.

7. Antonelli A, Veccia A, Palumbo C, et al. Holographic reconstructions for preoperative planning before partial nephrectomy: a head-to-head comparison with standard CT scan. Urol Int 2019;102:212-7.

8. Tang SL, Kwoh CK, Teo MY, Sing NW, Ling KV. Augmented reality systems for medical applications. IEEE Eng Med Biol Mag 1998;17:49-58.

9. Ukimura O, Gill IS. Augmented reality for computer-assisted image-guided minimally invasive urology. Interv Ultrason Urol 2009:179-84.

10. Thompson S, Penney G, Billia M, Challacombe B, Hawkes D, Dasgupta P. Design and evaluation of an image-guidance system for robot-assisted radical prostatectomy. BJU Int 2013;111:1081-90.

11. Porpiglia F, Fiori C, Checcucci E, Amparore D, Bertolo R. Augmented reality robot-assisted radical prostatectomy: preliminary experience. Urology 2018;115:184.

12. Porpiglia F, Checcucci E, Amparore D, et al. Augmented-reality robot-assisted radical prostatectomy using hyper-accuracy three-dimensional reconstruction (HA3D™) technology: a radiological and pathological study. BJU Int 2019;123:834-45.

13. Porpiglia F, Checcucci E, Amparore D, Manfredi M, Massa F, Piazzolla P, et al. Three-dimensional elastic augmented-reality robot-assisted radical prostatectomy using hyperaccuracy three-dimensional reconstruction technology: a step further in the identification of capsular involvement. Eur Urol 2019;76:505-14.

14. Schiavina R, Bianchi L, Lodi S, et al. Real-time augmented reality three-dimensional guided robotic radical prostatectomy: preliminary experience and evaluation of the impact on surgical planning. Eur Urol Focus 2021;7:1260-7.

15. Wake N, Bjurlin MA, Rostami P, Chandarana H, Huang WC. Three-dimensional printing and augmented reality: enhanced precision for robotic assisted partial nephrectomy. Urology 2018;116:227-8.

16. Porpiglia F, Checcucci E, Amparore D, et al. Three-dimensional augmented reality robot-assisted partial nephrectomy in case of complex tumours (PADUA ≥10): a new intraoperative tool overcoming the ultrasound guidance. Eur Urol 2020;78:229-38.

17. Schiavina R, Bianchi L, Chessa F, et al. Augmented reality to guide selective clamping and tumor dissection during robot-assisted partial nephrectomy: a preliminary experience. Clin Genitourin Cancer 2021;19:e149-55.

18. Kobayashi S, Cho B, Huaulmé A, et al. Assessment of surgical skills by using surgical navigation in robot-assisted partial nephrectomy. Int J Comput Assist Radiol Surg 2019;14:1449-59.

19. Kobayashi S, Cho B, Mutaguchi J, et al. Surgical navigation improves renal parenchyma volume preservation in robot-assisted partial nephrectomy: a propensity score matched comparative analysis. J Urol 2020;204:149-56.

20. Michiels C, Khene ZE, Prudhomme T, et al. 3D-Image guided robotic-assisted partial nephrectomy: a multi-institutional propensity score-matched analysis (UroCCR study 51). World J Urol 2021; doi: 10.1007/s00345-021-03645-1.

21. Kavoussi NL, Pitt B, Ferguson JM, et al. Accuracy of touch-based registration during robotic image-guided partial nephrectomy before and after tumor resection in validated phantoms. J Endourol 2021;35:362-8.

22. Amparore D, Checcucci E, Piazzolla P, et al. Indocyanine green drives computer vision based 3D augmented reality robot assisted partial nephrectomy: the beginning of “automatic” overlapping era. Urology 2022;164:e312-6.

23. Checcucci E, Autorino R, Cacciamani GE, et al.; Uro-technology and SoMe Working Group of the Young Academic Urologists Working Party of the European Association of Urology. Artificial intelligence and neural networks in urology: current clinical applications. Minerva Urol Nefrol 2020;72:49-57.

24. Houshyar R, Glavis-Bloom J, Bui TL, et al. Outcomes of artificial intelligence volumetric assessment of kidneys and renal tumors for preoperative assessment of nephron-sparing interventions. J Endourol 2021;35:1411-8.

25. Vezzetti E, Marcolin F, Tornincasa S, Ulrich L, Dagnes N. 3D geometry-based automatic landmark localization in presence of facial occlusions. Multimed Tools Appl 2018;77:14177-205.

26. Bertolo R, Hung A, Porpiglia F, Bove P, Schleicher M, Dasgupta P. Systematic review of augmented reality in urological interventions: the evidences of an impact on surgical outcomes are yet to come. World J Urol 2020;38:2167-76.

Cite This Article


OAE Style

Manfredi M, Piramide F, Amparore D, Burgio M, Busacca G, Colombo M, Meziere J, Granato S, Piana A, De Cillis S, Checcucci E, Fiori C, Porpiglia F. Augmented reality: the smart way to guide robotic urologic surgery. Mini-invasive Surg 2022;6:40. http://dx.doi.org/10.20517/2574-1225.2022.37
